Local LLM – AI on-premise

AI is transforming how businesses operate. But when using cloud-based AI, sensitive company data often has to leave your infrastructure. For many organizations, this raises questions about security, privacy, and control.

That’s why running a Local LLM (Large Language Model) on-premise can be a game-changer.

Why Local LLM?

Data stays in your infrastructure

Your information never leaves your servers, which keeps you in control and makes it easier to comply with internal policies and regulations.

Security and privacy by design

No exposure to external providers means a reduced risk of leaks or misuse of sensitive business data.

Customization to your workflows

Local models can be fine-tuned on domain-specific data so they understand your business language, industry terms, and unique processes.

Cost efficiency at scale

Avoid recurring API costs by running AI workloads directly on your own hardware.

Agentic AI capabilities

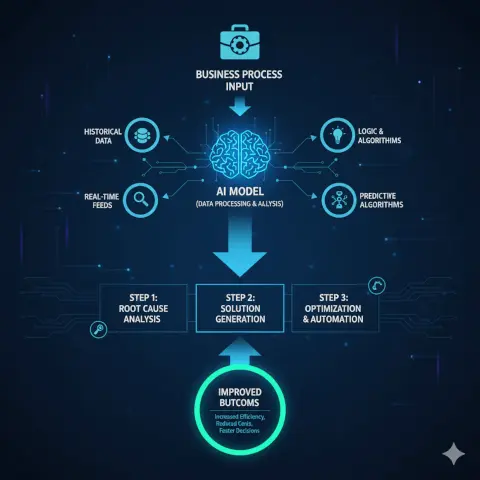

Beyond answering questions, Local LLMs can act as autonomous agents: planning, reasoning, and executing tasks across your systems. This enables smarter automation, decision support, and proactive assistance, all under your control.

Our Technology Stack

At Aulora AG, we implement Local LLM solutions using trusted open technologies such as:

Ollama

For lightweight local LLM hosting

n8n

For workflow automation and orchestration

MCP (Model Context Protocol)

For reliable integration of the model with your tools and data sources

Open WebUI

For a user-friendly interface
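
To give a feel for what "local hosting" means in practice, here is a minimal sketch of how an application could send a prompt to an Ollama server running on the same machine. It assumes Ollama's default local address (http://localhost:11434) and a model named llama3 that has already been pulled; both are illustrative assumptions, not a description of any specific Aulora AG deployment. The request never leaves the host.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3"):
    """Build the HTTP request for a single, non-streamed completion.

    The model name is a placeholder; use whichever model is installed locally.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3", opener=urllib.request.urlopen):
    """Send the prompt to the local server and return the generated text.

    `opener` is injectable so the function can be exercised without a server.
    """
    with opener(build_request(prompt, model), timeout=120) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

With a local server running, calling `generate("Explain in one sentence what an on-premise LLM is.")` returns the model's answer as a string. Workflow tools or a chat interface can sit on top of the same local endpoint.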